
Part 1

1. The past decade (11/11)

2. The past 30 years: satellite records (11/11)

3. The past 150 years: surface record (02/09)

4. The past 3000 years: proxy wars

5. Climate change over geologic time

Part 2

6. IPCC bias

7. CO2 and H2O

8. Oceans and geotectonics

9. Solar influences

10. Hype, hysteria, heresy

11. Sources

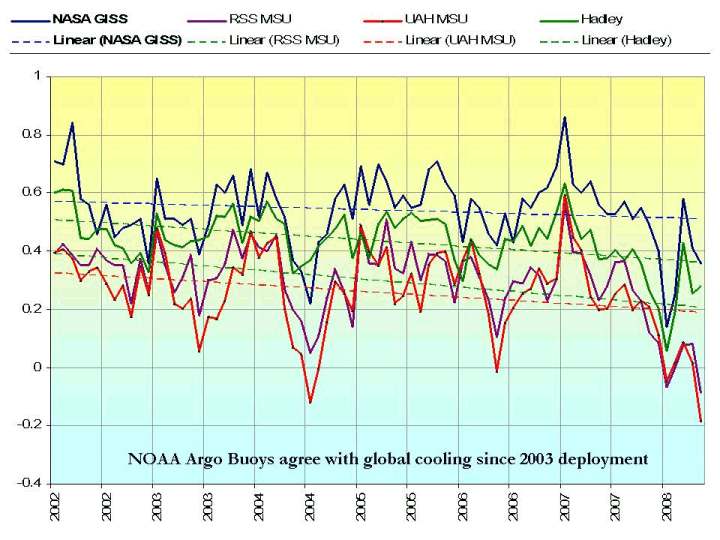

Global temperatures peaked in 1998, the year of a very strong El Niño, which was neither caused by global warming nor predicted by climate models. Since then, CO2 levels have risen sharply (by over 4%), yet global temperatures have remained essentially flat. Since late 2001 all four major official sources of global temperature data have shown a slight cooling trend (fig. 1.1).

Fig. 1.1 (icecap.us)

Proponents of AGW claim that in recent years ‘man-made warming’ has been offset by ‘natural factors’, such as ocean cycles entering a cool phase. In other words, they admit that natural variability can more than cancel the alleged man-made warming, even though natural climate variability tends to be downplayed in IPCC reports. However, they draw the line at reexamining the assumptions underlying the large amount of CO2-induced warming calculated by climate computer models, which project continuously rising temperatures for the next century.

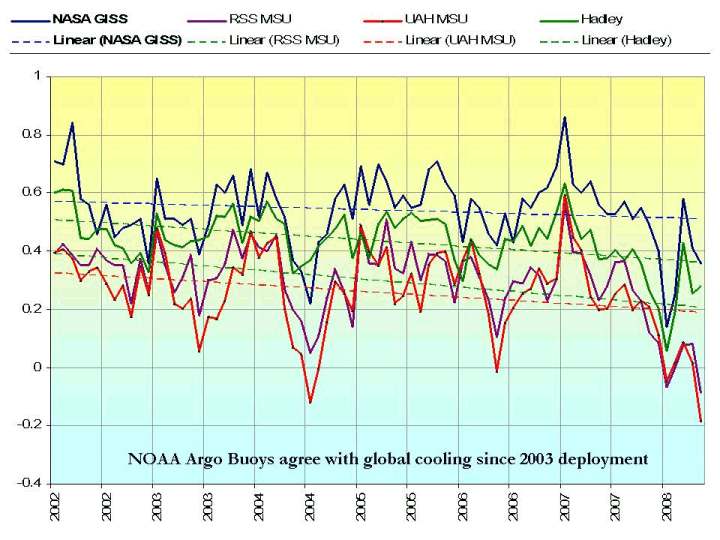

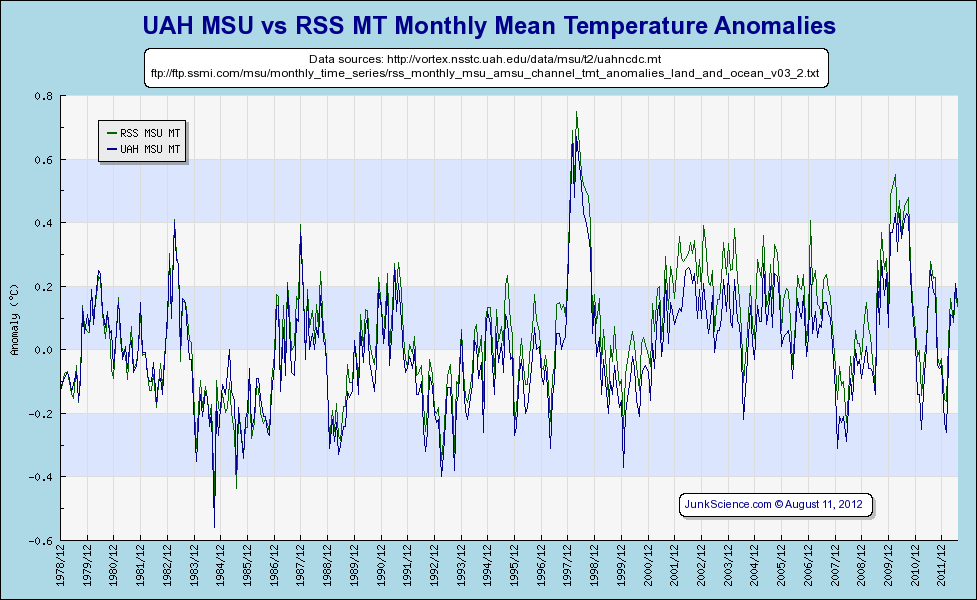

Fig. 1.2 IPCC temperature projections versus reality. The blue and green curves are actual temperatures as measured by ground-based (HadCRUT) and satellite (UAH LT) monitoring systems. The yellow curve shows how much warming would supposedly occur even if CO2 concentrations stopped increasing. The red, orange, and brown curves are model forecasts of global temperatures under different emission scenarios. (icecap.us) (updated graph: climaterealists.com)

Opinions differ on how much longer the present temperature dip will last. The UK Met Office has predicted that anthropogenic global warming will resume in 2009, and that at least half the years between 2009 and 2014 will be warmer than the record set in 1998. But a team of scientists recently forecast in Nature that cooling could continue for another 10 years, ‘as natural climate variations in the North Atlantic and tropical Pacific temporarily offset the projected anthropogenic warming’. Some AGWers argue that ‘80% to 90% of global warming involves heating up ocean waters’, and are puzzled by the fact that data from over 3000 Argo floats in the world’s oceans show there has been slight cooling in the past five years. They are convinced, however, that this is just a temporary blip. Some AGW critics think we might be heading for a long-term cooler period, especially since the Pacific Decadal Oscillation, which had been in a warm phase since 1977, switched back to a cool phase in April 2008, and solar activity is set to weaken over the next couple of decades (sunspot cycles 24 and 25).

The debate on the precise nature and relative importance of human and natural factors in climate change is still very much alive, despite the commonly heard claim that ‘the debate is over’ and ‘the science is settled’. In 2003 a poll of 530 climate scientists in 27 countries found that two-thirds did not believe that ‘the current state of scientific knowledge is developed well enough to allow for a reasonable assessment of the effects of greenhouse gases’. About half of those polled felt that the science of climate change was not sufficiently settled to pass the issue over to policymakers (Bray & Storch, 2007). The US Senate Environment and Public Works Committee has posted a list of over 650 ‘dissident’ climate scientists from all over the world. Over 31,000 US scientists have signed the Oregon Institute of Science and Medicine’s petition rejecting the idea that global warming is mainly caused by human activity. In a recent survey of more than 51,000 scientists affiliated with the Association of Professional Engineers, Geologists and Geophysicists of Alberta, Canada, 68% of respondents disagreed with the statement that ‘the debate on the scientific causes of recent climate change is settled’, and only 26% attributed global warming to ‘human activity like burning fossil fuels’.

Ultimately, of course, it’s not the number of scientists supporting this or that point of view that counts – it’s the soundness of the underlying science.

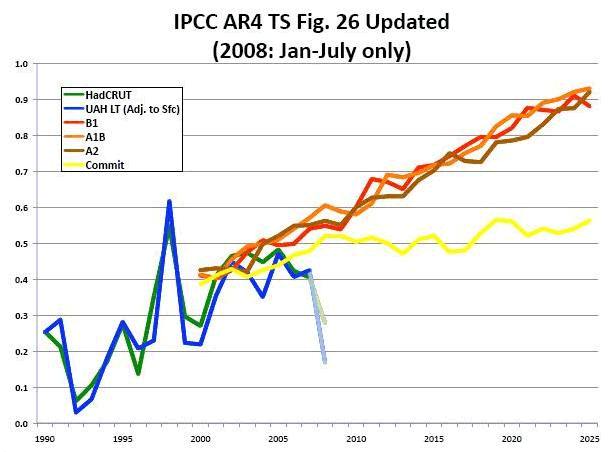

Earth-orbiting satellites have been recording global temperature data since 1979. In contrast to ground-based temperature measurements, satellite measurements cover the entire planet (except for small regions near the poles). There are two sources of data: UAH (University of Alabama at Huntsville) and RSS (Remote Sensing Systems). The data from these sources agree very closely, and show that present temperatures are barely above the 30-year average, despite a 17% increase in atmospheric CO2 over this period.

Fig. 2.1 (junkscience.com)

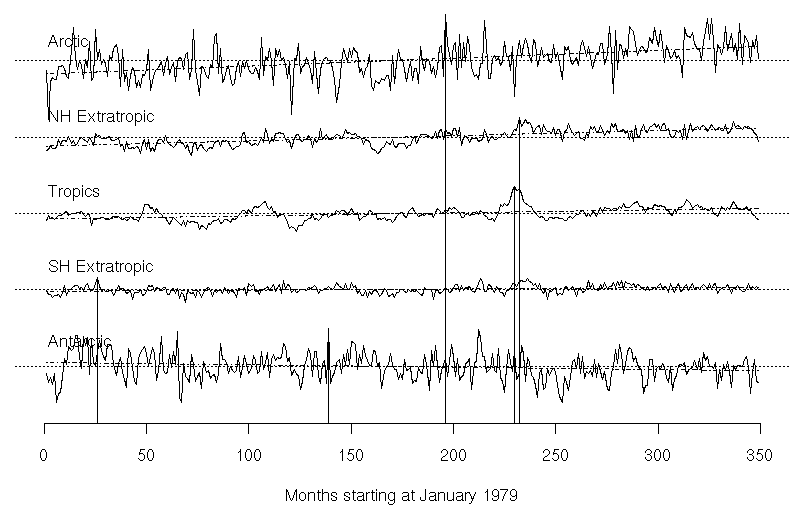

Fig. 2.2 shows data from January 1979 to January 2008 for different swaths of the earth: the north polar region, the northern hemisphere outside the tropics, the tropics, the southern hemisphere outside the tropics, and the south polar region. The horizontal dashed line shows the 30-year mean. Vertical lines show when the maximum temperature occurred in each region: in 1998 for NH Extratropic and Tropics, in 1995 for the Arctic, in 1990 for the Antarctic, and in 1981 for the SH Extratropic. The straight, dash-dotted lines are simple regression lines, i.e. the best-fit, overall trend lines. Clearly, most of the warming has occurred in the northern hemisphere, with slight cooling at the south pole.

Fig. 2.2 (wmbriggs.com)
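The ‘simple regression lines’ in fig. 2.2 are ordinary least-squares fits. A minimal sketch of such a fit is given below; the anomaly series is invented for illustration (a small linear trend plus a four-year oscillation) and is not the UAH or RSS data, and the function name `linear_trend` is an assumption of this sketch.

```python
import numpy as np

def linear_trend(years, anomalies):
    """Return (slope, intercept) of the ordinary least-squares line."""
    slope, intercept = np.polyfit(years, anomalies, deg=1)
    return float(slope), float(intercept)

# Hypothetical monthly anomalies (not real satellite data):
# a small linear trend plus a four-year oscillation.
years = 1979 + np.arange(348) / 12.0
anomalies = 0.01 * (years - 1979) + 0.2 * np.sin(2 * np.pi * (years - 1979) / 4.0)

slope, intercept = linear_trend(years, anomalies)

# What the straight line leaves unexplained
residuals = anomalies - (slope * years + intercept)

print(f"fitted trend: {slope * 10:.3f} deg C per decade")
print(f"largest departure from the line: {np.abs(residuals).max():.2f} deg C")
```

The residuals show how much of a periodic signal a single straight line leaves behind.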

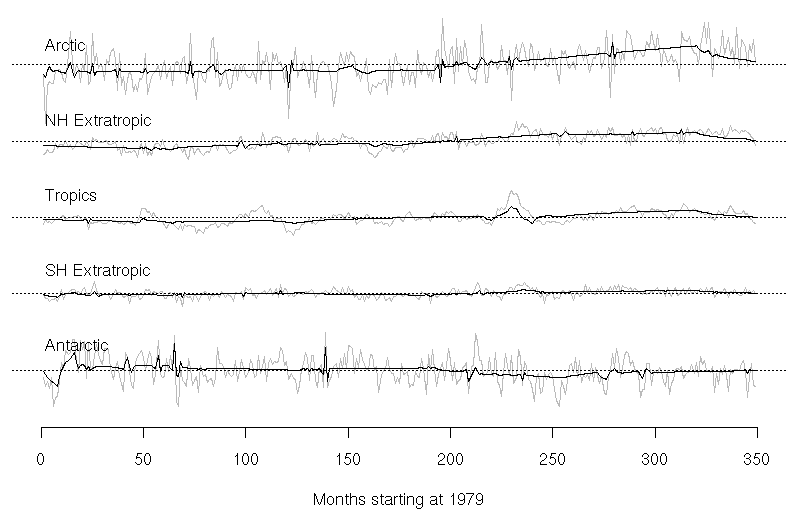

Statistician William Briggs (2008) has analysed these graphs further and shows that simple trend lines are inadequate as they fail to capture a semi-periodic signal that is clearly present in the data. He applies a different method of smoothing the data, known as wavelet analysis, which highlights the fact that temperatures are not increasing linearly across the globe (fig. 2.3).

Fig. 2.3

The instrumental temperature record goes back to about 1850. The overall temperature trend has been upwards since then. The earth warmed strongly between 1915 and 1940, cooled somewhat between 1940 and 1975, and then warmed strongly again between 1975 and 1998. Since the El Niño temperature spike in 1998, global temperatures have flatlined. In its 2007 Fourth Assessment Report the IPCC concedes, in contrast to earlier reports, that the pre-1940 warming was mainly caused by natural factors, such as solar variability.

CO2 concentrations began to rise rapidly after the second world war, yet temperatures did not. The cooling from 1940 to 1975 generated a ‘global cooling’ scare and fears of ‘catastrophic famines’ and a new ice age. Attempts have been made to explain this cooling period as the effect of sulphate aerosols from the burning of sulphur-containing fuels. However, such burning did not suddenly begin in 1940 or diminish in 1970. Others blame volcanic activity, which injects sunlight-reflecting dust into the troposphere. But the effect of an extremely large eruption normally lasts 1 to 3 years, not 30 years. Moreover, the only big eruption in the 1940-70 period took place in Indonesia in 1963 – which fails to explain the drop in temperature from 1942 to 1952 (Kauffman, 2007).

Both NASA’s Goddard Institute for Space Studies (GISS) and the UK Met Office’s Hadley Climate Research Unit (CRU) provide long-term surface temperature data – known as GIStemp and HadCRUT respectively. These data are derived from a network of ground-based thermometers (or electronic sensors). The CRU’s data includes measurements of sea surface temperatures.

Fig. 3.1 The CRU’s graph of surface temperatures for the period 1850 to December 2008. (cru.uea.ac.uk)

Fig. 3.2 NASA’s graph of surface temperatures for the period 1880 to January 2009. (data.giss.nasa.gov)

Both these data sources show a steeper warming trend than the satellite measurements for the post-1979 period.

Potential errors

Strictly speaking, ‘global temperature’ is not a physically meaningful quantity; there are only local temperatures, ranging at any given time from about -80°C to +40°C, which are then averaged to produce a ‘global’ figure. This average is then regarded as a temperature itself, ‘as if the out-of-equilibrium climate system has only one temperature’. But ‘The temperature field of the Earth as a whole is not thermodynamically representable by a single temperature’, and tiny trends in average temperature provide ‘no basis for concluding that the atmosphere as a whole is either warming or cooling’ (Essex et al., 2007). Roger Pielke & Thomas Chase argue that ‘Changes in global heat storage provide a more appropriate metric to monitor global warming than temperature alone’.

Note that even local average temperatures are generally unknown. At most weather stations there is only one temperature measurement a day. Some stations use a maximum and minimum thermometer, and the mean of the daily maximum and daily minimum temperature is then considered to be an average. Modern statistics, however, does not recognize such an ‘average’, which can depart significantly from a genuine average, derived from, say, hourly readings. William Gray (2008b, pp. 5-6) reports:

if you compare this [max/min] average with the average of the 24 hourly readings from one midnight to another, you get a large bias, which for the average of 24 New Zealand weather stations was +0.5°C for a typical summer day with a range of +2.6°C to -0.4°C and an average of +0.9°C with a range of +1.9°C to -0.9°C for a typical winter day. The positive bias of the max/min average over the mean hourly value can thus be larger than the claimed effects of greenhouse warming.
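The difference between the two kinds of ‘average’ can be illustrated with a short sketch. The 24 hourly readings below are invented for a hypothetical day with a long cool night and a short warm afternoon; they are not taken from Gray’s New Zealand stations, and the function names are assumptions of this sketch.

```python
import numpy as np

def minmax_mean(hourly):
    """Mean of the daily maximum and daily minimum."""
    return (float(np.max(hourly)) + float(np.min(hourly))) / 2.0

def hourly_mean(hourly):
    """Arithmetic mean of all hourly readings."""
    return float(np.mean(hourly))

# Hypothetical readings in deg C, midnight to midnight (24 values)
hourly = np.array(
    [10.0] * 8
    + [11, 12, 14, 16, 18, 19, 20, 19, 18, 16, 14, 13, 12, 11, 11, 10],
    dtype=float,
)

bias = minmax_mean(hourly) - hourly_mean(hourly)
print(f"max/min average:    {minmax_mean(hourly):.2f}")
print(f"hourly average:     {hourly_mean(hourly):.2f}")
print(f"bias (max/min - hourly): {bias:+.2f}")
```

The size and sign of the bias depend entirely on the shape of the daily cycle, so this toy day says nothing about the magnitude at any real station.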

Other potential sources of error in ground-based measurements include contamination by urban heating effects (due to the replacement of vegetation with heat-absorbing asphalt and concrete), disproportionate concentration of thermometers in urban areas, changes in instrumentation, changes in station and instrument locations, loss of stations, missing monthly data, and changes in the time of day when thermometers are read.

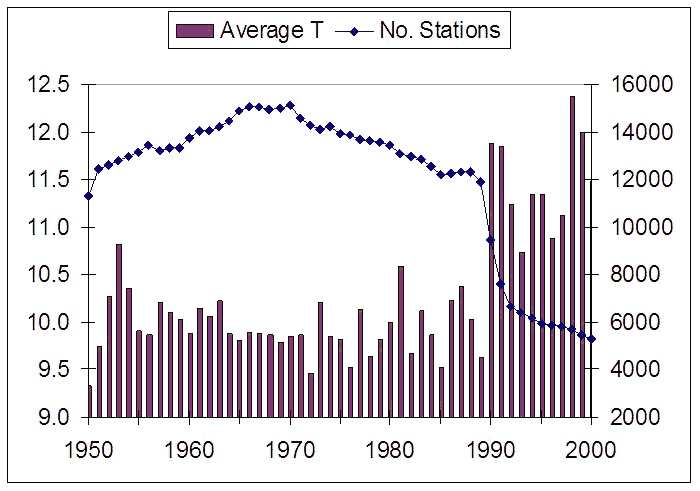

The number of weather stations worldwide has fallen sharply in the past two decades. When the Soviet Union collapsed in the early 1990s, more than half the world’s weather stations were closed in just four years, which means that today’s average can’t really be compared with that from the 1980s. Commenting on the decline in the number of ground stations since the 1970s, especially in Siberia, Fred Singer (2008, p. 8) writes:

Ideally, the models require at least one measuring point for each 5 degrees of latitude and longitude – 2,592 grid boxes in all. With the decline in stations, the number of grid boxes covered also declined – from 1,200 to 600, a decline in coverage from 46 percent to 23 percent. Further, the covered grid boxes tend to be in the more populated areas.
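The grid-box figures in Singer’s passage can be checked with a few lines of arithmetic. The sketch below assumes a regular latitude-longitude grid covering the whole globe; the function names are illustrative only.

```python
def grid_box_count(cell_deg):
    """Number of cells in a regular lat-lon grid with the given cell size."""
    return (360 // cell_deg) * (180 // cell_deg)

def coverage_percent(covered_boxes, cell_deg=5):
    """Share of grid boxes containing at least one station, in percent."""
    return 100.0 * covered_boxes / grid_box_count(cell_deg)

print(grid_box_count(5))                 # 72 x 36 boxes
print(round(coverage_percent(1200)))     # coverage before the decline
print(round(coverage_percent(600)))      # coverage after the decline
```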

Fig. 3.3 This graph shows the number of weather stations around the world and the simple mean of the temperature data. (uoguelph.ca/~rmckitri/research/nvst.html)

Sea surface temperature measurements have even poorer coverage and are far more dubious than those on land, as the measurement locations, instruments and conditions are highly variable. Originally, these measurements were made by collecting water in canvas buckets. In the 1960s measurements were taken much deeper in the water, in the pipe that draws in water to cool the ship’s engines; this method yields temperatures 0.3°C to 0.7°C higher than the earlier method. In the 1980s data were obtained from drifting and moored buoys and transmitted by satellite. And since the 1970s satellites have acquired data from the very top layer of the surface. Given the unreliability of historical sea surface temperatures (SSTs), NASA ignores them altogether – and with them 71% of the earth.

Temperature data are ‘corrected’ in all sorts of ways, but the authorities that make the adjustments have failed to document exactly what they are doing, often refusing outright to archive data and methodological procedures (see below).

Urban heat

In its Fourth Assessment Report (AR4), the IPCC (2007, ch. 3) states that ‘Global mean surface temperatures have risen by 0.74°C ± 0.18°C when estimated by a linear trend over the last 100 years (1906-2005)’. It says that urban heat island effects are real but local, and claims that they have a negligible influence (less than 0.006°C per decade over land and zero over the oceans). However, 0.006°C per decade amounts to 0.06°C per century, which is nearly one-tenth of the entire warming in the 20th century. Moreover, numerous studies (e.g. Pielke et al., 2007; McKitrick and Michaels, 2007a) have contradicted the claim that urbanization has been properly adjusted for, and have shown that surface temperature data are still seriously contaminated by urban heat island effects, implying that the warming trend is lower than NASA and the IPCC claim, and more in line with satellite-based trends for the past 30 years.
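The conversion from a per-decade to a per-century figure, and its size relative to the IPCC’s 100-year trend, is simple arithmetic. The sketch below uses only the two numbers quoted above; the variable names are assumptions.

```python
uhi_per_decade = 0.006    # deg C per decade, IPCC upper bound for urban heating over land
trend_100yr = 0.74        # deg C, IPCC linear trend for 1906-2005

uhi_per_century = uhi_per_decade * 10
share = uhi_per_century / trend_100yr

print(f"urban effect per century: {uhi_per_century:.2f} deg C")
print(f"share of the 100-year trend: {share * 100:.1f}%")
```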

In AR4, the IPCC cites an influential article by Peterson (2003) to support its claim that the impact of urbanization is insignificant. However, after analyzing Peterson’s data, statistics expert Steve McIntyre concluded that ‘actual cities have a very substantial trend of over 2 deg C per century relative to the rural network – and this assumes that there are no problems with the rural network – something that is obviously not true since there are undoubtedly microsite and other problems’.

Fig. 3.4 Temperature trends of major US city sites and rural sites. (climateaudit.org/?p=1859)

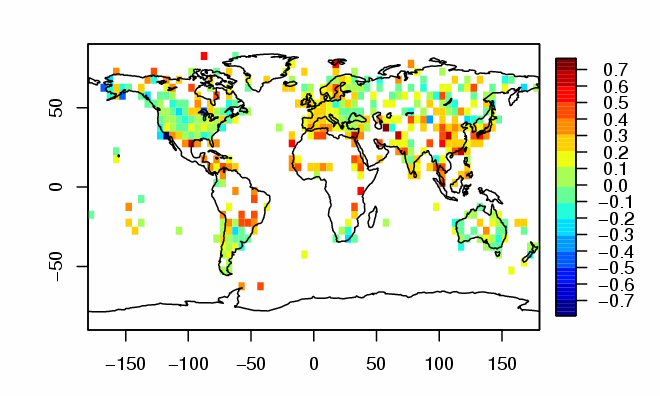

Ross McKitrick & Pat Michaels (2004) showed that the adjustments made to raw temperature data were not properly removing contamination caused by factors such as urbanization and loss of stations. They estimated that if the contamination were removed, the average measured warming rate over land would decline by about half. Using different data and methods, Jos de Laat & Ahilleas Maurellis (2006) also demonstrated a statistically significant correlation between the spatial pattern of warming in climate data and the spatial pattern of industrial development.

In the first draft of its Fourth Assessment Report, the IPCC simply claimed that, while city data are distorted by urban warming, this does not affect the global averages, and ignored all evidence to the contrary. Ross McKitrick was one of the reviewers of the draft report and submitted detailed criticisms. Since the second draft did not contain any discussion either, he again submitted lengthy comments. In the final version, the IPCC referred to the above articles, but claimed that, due to unspecified ‘atmospheric circulation changes’, ‘the correlation of warming with industrial and socioeconomic development ceases to be statistically significant’ – a claim that it failed to back up with any evidence whatsoever. As McKitrick & Michaels (2007b) say, ‘The technical term for this is “making stuff up.” ’ Furthermore, the IPCC’s claim that the correlation is due to natural circulation changes ‘contradicts its later (and prominently advertised) claims that recent warming patterns cannot be attributed to natural atmospheric circulation changes’.

In a recent follow-up study, McKitrick and Michaels (2007a) present a larger dataset with a more complete set of socioeconomic indicators. They showed that the spatial pattern of warming trends is so tightly correlated with indicators of economic activity that the probability they are unrelated is less than 1 in 14 trillion. They also showed that the contamination patterns are largest in regions experiencing real economic growth, and account for about half the surface warming measured over land since 1980.

Fig. 3.5 Bias of IPCC temperature data. Each square is colour-coded to indicate the size of the local bias. Blank areas indicate that there was no data available. (McKitrick & Michaels, 2007a)

US station sites: warming biases

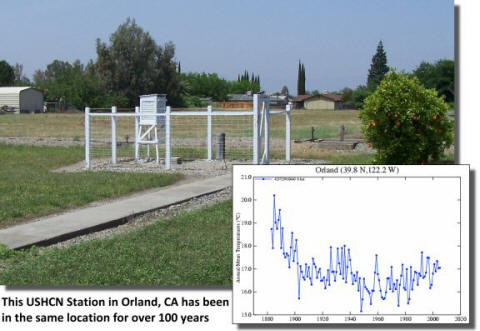

The National Oceanic and Atmospheric Administration (NOAA) is responsible for the operation, documentation and upkeep of the weather stations belonging to the US Historical Climatology Network (USHCN). But by 1997, despite concerns about the deteriorating quality of the climate measuring network, it had not even begun to compile a photographic database of the stations in the network. A grassroots effort was therefore launched, spearheaded by retired meteorologist Anthony Watts, to photograph and document all 1221 USHCN climate stations (see surfacestations.org).

As of 5 January 2009, 737 stations had been surveyed, and the results confirm the poor state of the network. Over time, many ground stations have found themselves in increasingly unsuitable surroundings. Watts writes:

There have been instances recorded of air conditioners being located directly adjacent to the thermometer, vehicles parked next to thermometers head-in, heat generating electronics and electrical components being placed in the thermometer shelters within inches of the sensor, and sensors being located in the middle of large areas of asphalt/concrete and directly attached to buildings all in violation of standard published NOAA practices for temperature measurement. (surfacestations.org/faqs.htm)

Of the stations surveyed, 19% have an estimated warming bias of 1-2°C, 57% of 2-5°C, and 12% of at least 5°C.

Fig. 3.6 An example of a well-sited and well-maintained station. The graph shows temperatures from 1880 to the present.

Fig. 3.7 This site in Marysville, California, has been around for about the same amount of time, but has been encroached upon by growth, producing a warming bias so large that the site is useless.

NASA’s adjustments

In 2007, Steve McIntyre noticed something odd about the year 2000 in NASA’s GISS temperature record for the US, and asked to see the computer code. NASA refused, but by analysing datasets, McIntyre was able to locate the error and notified NASA in August 2007. He again asked for access to the code but his request was again turned down. NASA corrected the error on its website within two days, but without acknowledging McIntyre (climateaudit.org/?p=1854; climateaudit.org/?p=1868). The correction reduced the post-2000 temperature anomalies by 0.15°C. This is a substantial error as the GISS data show a rise of only about 0.5°C for the US as a whole over the last century. As a result, 1934 has replaced 1998 as the warmest year of the 20th century for the US, and the 1930s are now the warmest decade.

Fig. 3.8 US temperature anomalies, 1880 to Jan. 2009. (data.giss.nasa.gov)

A spin-off of McIntyre’s intervention was that NASA administrators instructed James Hansen, the Director of NASA’s Goddard Institute for Space Studies (GISS), to archive the computer code, which was done in September 2007. McIntyre says that the code is awful and so poorly documented that it is virtually incomprehensible (in breach of NASA software policies). An analysis showed that some of Hansen’s data adjustments are ‘breathtakingly bizarre’ and that his methodology ‘seems demented’ (climateaudit.org/?p=3201).

NASA uses data from the US Historical Climatology Network (USHCN) and the Global Historical Climatology Network (GHCN). Fig. 3.9a shows the raw unadjusted USHCN temperature trends for the US in the 20th century: more than half the country shows declining temperatures (blue and green). Fig. 3.9b shows that after the adjustments the country turns noticeably warmer (red and yellow).

Fig. 3.9 20th-century US temperatures: before adjustment (top) and after adjustment (bottom). (www.climateaudit.org)

It has been established that in many cases NASA has adjusted more recent temperatures upwards and earlier temperatures downwards, thereby producing a steeper warming trend. At several sites in Peru, for example, mid-20th-century temperatures have been lowered by as much as 3°C!

Fig. 3.10 Adjusted and unadjusted temperature curves from three Peruvian weather stations: Puerto Maldonado, Pucallpa, and Cuzco. The past seems to be cooling! (climateaudit.org/?p=2793)

Fig. 3.11 Temperature curves for two New Zealand weather stations: Wellington (left) and Auckland (right). Blue = unadjusted, red = adjusted. The raw temperature data show essentially no warming, but the adjusted data do. (appinsys.com/GlobalWarming/RS_NewZealand.htm)

Steve McIntyre has discovered that out of the 7364 stations in the GISS worldwide network, 47% are adjusted, and 45% of these adjustments are ‘negative urban adjustments’, i.e. adjustments that make the warming trends steeper. The temperature trend at an urban station is adjusted upwards if a nearby rural station has a steeper trend. A serious problem is that the population database used to classify many sites as rural is out of date, and there are stations at cities with populations well over 10,000 that are incorrectly classified as rural. In Peru, one ‘rural’ station is located in a city with a population of 400,000. McIntyre comments that in such cases, ‘the GISS “adjustment” ends up being an almost completely meaningless adjustment of one set of urban values by another set of urban values. No wonder these adjustments seem so random’ (climateaudit.org/?p=2798). In short, the urban adjustment is supposed to remove the effects of urbanization, but NASA’s negative adjustments actually make the situation worse.

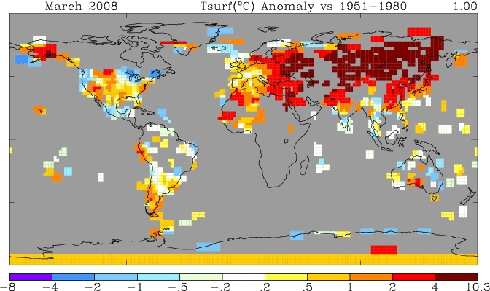

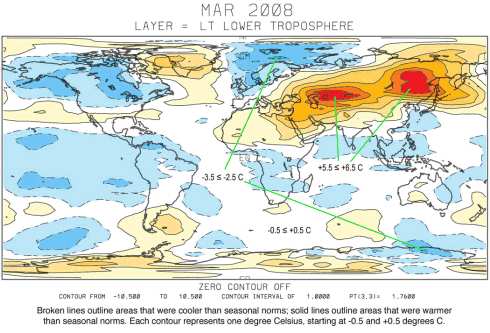

In March 2008, NASA reported temperature anomalies more than 0.5° warmer than UAH (see climateaudit.org/?p=2964). NASA temperatures indicate that March 2008 was the third warmest March on record, whereas UAH and RSS satellite data indicate that it was the second coldest on record in the southern hemisphere, and barely above average worldwide. NASA’s map for March (fig. 3.12) shows that it has essentially no data (gray areas) for most of Canada, most of Africa, the Greenland ice sheet, and most of Antarctica. As shown in the UAH satellite map (fig. 3.13), the missing areas in Canada and Africa were cold (Goddard, 2008b). In other words, the NASA data is disproportionately weighted towards warm areas, especially in the northern hemisphere. It is worth noting that NASA uses a relatively cool period (1951-1980) as its baseline, thereby making all recent temperatures relatively warm. Note that even though NASA has no data from 20% of the earth’s land area and uses none from the oceans it still reports global temperature measurements to a hundredth of a degree.

Fig. 3.12 NASA temperatures for March 2008 make it look hot.

Fig. 3.13 UAH satellite temperatures for March 2008 make it look cool.

In 1992 NASA stopped collecting data from numerous rural sites all over the world, including sites in Australia, Russia, China, and South America. Yet data is still being gathered at many of these locations and is readily available. For example, over 380 Australian stations – 90% of them rural – were dropped from the GISS network in 1992 alone, even though most are still operational (climateaudit.org/?p=2703). Steve McIntyre remarks: ‘You can get data on the internet that NASA hasn’t been able to locate. It’s pathetic.’

Whether the UK’s Climate Research Unit (CRU) does a better job of calculating the global temperature than NASA is difficult to say, as it refuses to provide either the data or its computer code. As far as is known, it makes no correction for the urban heat island effect, which it claims to be insignificant.

Commenting on NASA’s temperature adjustments, Stephen Goddard (2008a) says:

Satellite data shows temperatures near or below the 30 year average – but NASA data has somehow managed to stay on track towards climate Armageddon. You can draw your own conclusions, but I see a pattern that is troublesome. In science, as with any other endeavour, it is always a good idea to have some separation between the people generating the data and the people interpreting it.

James Hansen, the person in charge of NASA’s temperature data, doesn’t bother responding to his critics because he says he’s not interested in ‘jousting with jesters’. Steve McIntyre quips: ‘I guess he wants center stage all to himself for his own juggling act.’

Hansen happens to be one of the world’s most vocal advocates of the catastrophic greenhouse warming doctrine, and science advisor to fellow-evangelist Al Gore. In a speech to Congress in June 1988, Hansen was one of the first to sound the alarm about global warming: he said that evidence of the greenhouse gas effect was 99% certain, and that ‘it is time to stop waffling’. To mark the 20th anniversary of this event, Hansen delivered a speech in June 2008 in which he called for oil executives who spread ‘misinformation’ about global warming to be tried for ‘crimes against humanity and nature’.

We might wonder whether this should also apply to Hansen’s own misinformation and alarmist scaremongering. In 1988 Hansen thought the world would be about 0.8°C warmer than the 1951-80 average by 2007 – a third more warming than has been observed. In fact, according to his own (GISS) temperature data, the global temperature anomaly in 1988 was +0.50°C, whereas in June 2008 – due to the recent fall in temperatures – it was +0.35°C. So after 20 years of ‘dangerous’ global warming, the temperature was actually slightly cooler than when Hansen made his ‘groundbreaking’ speech. What a farce!

From hockey stick to spaghetti

One of the IPCC’s most serious blunders was its promotion of the infamous ‘hockey stick’ graph in its 2001 Third Assessment Report. The graph was a reconstruction of northern hemisphere climate by Michael Mann and his colleagues Bradley and Hughes, first published in 1998/99, which purported to show that northern temperatures had been declining very slowly for a thousand years, before shooting up at an alarming pace in the 20th century. It was used to justify the claim that the 20th century was the warmest in the past 1000 years, and to dismiss the Medieval Warm Period as no more than a local phenomenon, despite its being attested to by historical records and archaeological data from around the world.

In a series of papers, Steve McIntyre and Ross McKitrick demonstrated that the hockey stick graph was a thoroughly sloppy piece of work containing major statistical and procedural flaws, such as ‘calculation errors, data used twice, use of wrong data and a computer program that generated a hockey stick out of even random data’. Their conclusions were upheld in 2006 by an expert committee of the National Research Council of the US National Academy of Sciences and by a congressional committee headed by leading statistician Edward Wegman (see McIntyre, 2007; The global warming scare, section 3).

Fig. 4.1 The discredited hockey stick. The long, flat shaft of the hockey-stick-shaped graph is mostly reconstructed from tree-ring proxy data and the almost upright blade represents the instrumental temperature record. No one – journal editors and reviewers, other climate scientists, or the IPCC – had made any attempt to check the hockey stick until McIntyre and McKitrick came along. And then Mann initially did everything he could to obstruct them by refusing to disclose vital information.

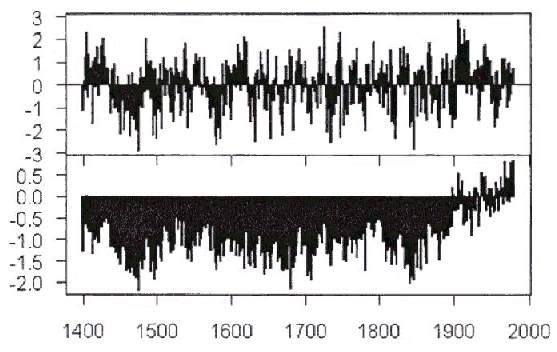

Fig. 4.2 Top panel: The simple average of the 415 proxy series in Mann’s dataset looks nothing like a hockey stick, and doesn’t even slope upward in the 20th century. Bottom panel: The hockey-stick shape emerged solely as a result of the way the data were averaged. Mann overweighted a small set of tree-ring data from bristlecone pines in the western USA, which had long been viewed as unreliable indicators of historical temperature. (McKitrick, 2007b)
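How much the shape of a composite depends on the weighting can be seen in a toy example. The sketch below is not Mann’s procedure (which was based on principal components) and uses no real proxy data: it builds nine flat, noisy series and one series with a late uptick, all invented, and compares a simple average with an average that gives the tenth series a heavy, arbitrarily chosen weight.

```python
import numpy as np

rng = np.random.default_rng(0)
n_years, n_flat = 100, 9

# Nine hypothetical series with no trend, only noise
flat = rng.normal(0.0, 0.1, size=(n_flat, n_years))

# One hypothetical series that is flat for 80 years and then rises
rising = np.concatenate([np.zeros(80), np.linspace(0.0, 2.0, 20)])

proxies = np.vstack([flat, rising])

# Equal weights versus a heavy weight (arbitrary value) on the rising series
simple = proxies.mean(axis=0)
weights = np.array([1.0] * n_flat + [50.0])
weighted = np.average(proxies, axis=0, weights=weights)

print(f"final value, simple average:   {simple[-1]:.2f}")
print(f"final value, weighted average: {weighted[-1]:.2f}")
```

With equal weights the uptick is diluted by the nine flat series; with the heavy weight it dominates the composite.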

Steve McIntyre believes there is a good chance that Mann’s hockey stick will become a classic example in statistics texts of how not to do things. But for years, the hockey stick was the main weapon used by alarmists to spread fear of an imminent climate catastrophe, and it continues to be used as a propaganda tool to this day. It was featured, for example, in Al Gore’s film and book, An Inconvenient Truth – which have been described as ‘climate porn’. James Peden (2008) describes some of the dramatic scenes Gore treats us to:

The hockey stick goes up on the big screen, and Gore boards a mechanical lift with cameras grinding, pointer in hand as he rises in unison with the blade of the stick which starts growing upward toward the ceiling. No longer are we talking about tenths of a degree, the temperature is rising like a puff pastry, and headed toward the attic. It all began with the word ‘if’. If the hockey stick tip continues to rise (lift starts going upward, the audience holds its breath) then... and along comes computer animations of New York flooding, Florida underwater, and poor little Polar Bears struggling to board the last piece of ice floating in the open Arctic Sea. (sigh ...) [...] It is Hollywood at its finest, and the Deacons of La La Land give it an Oscar. Even the Nobel Committee is impressed, gives it two thumbs-up and a Nobel Prize to Gore and the other members of the IPCC for the many lives that will be saved in the future because of this brilliant early warning. And, there’s still time for we miserable humans to ‘save’ the planet by buying ‘carbon offsets’ accomplished best by investing in Al Gore’s British company which buys stock in other companies that will benefit from a world-wide global warming hysteria [...]

Peden says that while Gore’s film ‘has serious students of climate change laughing their heads off, the British didn’t think it was very funny’. In 2007 a UK High Court judge found that it contained nine serious errors and that it would be unlawful to distribute it to all UK secondary schools unless it was accompanied by a list of corrections, as otherwise it would contravene legislation banning the political indoctrination of children. The judge only spoke of nine errors, because the British government had already admitted that the statements in question were dubious. Christopher Monckton (2007b) details 35 inaccuracies and exaggerations in the movie, and Marlo Lewis (2007) dissects Gore’s book line by line, identifying 99 distortions.

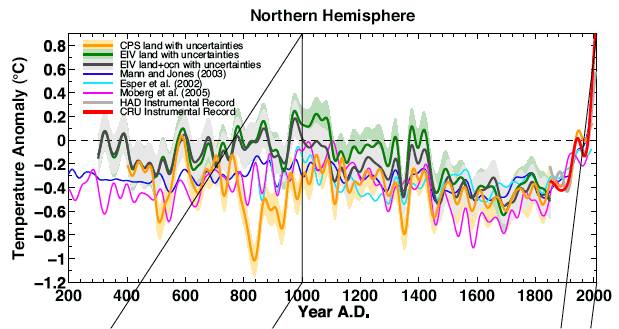

The hockey stick was featured six times in the IPCC’s Third Assessment Report (TAR). The Fourth Assessment Report (AR4) incorporates the hockey stick curve as one of several climate reconstructions in a ‘spaghetti’ diagram (2007, fig. 6.10b). The other curves tend to show greater variability than Mann’s, but still make the late 20th-century temperature increase look ‘unprecedented’. However, all these studies are the work of close associates of Mann and use many of the same temperature proxies (see McIntyre, 2008a). In particular, they use ‘strip-bark’ bristlecone and foxtail samples, even though the 2006 NRC panel advised that strip-bark samples should be avoided as they were not reliable temperature proxies, being very sensitive to precipitation. AR4 completely ignores the Wegman report, which highlighted the lack of independence between the various temperature reconstructions cited in support of the hockey stick. The IPCC (2007, SPM) uses the ‘spaghetti’ diagram to justify the claim that ‘Average Northern Hemisphere temperatures during the second half of the 20th century were very likely higher than during any other 50-year period in the last 500 years and likely the highest in at least the past 1,300 years’. There are many climate reconstructions showing that modern warming is by no means ‘unprecedented’, but the IPCC simply ignores them.

Another spaghetti diagram from AR4 is shown below (IPCC, 2007, box 6.4, fig. 1).

Fig. 4.3 The ‘W USA’ curve is none other than Mann’s hockey stick (principal component 1), which the NRC and Wegman reports proved to be based on biased and flawed methodology (climateaudit.org/?p=1506). Steve McIntyre’s critical comments on this spaghetti diagram, as IPCC reviewer, and the IPCC’s disingenuous responses can be found here: climateaudit.org/?p=2245.

David Holland (2007) writes:

While no ‘hockey stick’ made it into the SPM [Summary for Policymakers] this time, the ‘hockey team’ did a good job in ensuring that the UK Government’s wishes were met, resisting the inclusion in the technical reports of most of the serious doubts over the integrity of some of the key studies that are used to support the hypothesis of anthropogenic global warming. That the ‘hockey stick’ should have been so comprehensively invalidated by two highly qualified, independent, peer reviewed studies and public hearings, and yet is retained in any guise by the IPCC in its latest AR4 report, indicates how insular and unscientific a body the IPCC has become. (p. 980)

Michael Mann et al. (2008) recently published a new ‘improved’ version of the hockey stick. They claim that if tree-ring proxies are included, late 20th-century warming in the northern hemisphere is ‘unprecedented’ in the last 1700 years, and if tree rings are left out, it is still ‘unprecedented’ in the last 1300 years. This ‘finding’ was broadcast around the world with great fanfare. But once again, Mann’s methods do not stand up to close scrutiny (http://noconsensus.wordpress.com 1, 2, 3; climateaudit.org 1, 2). He begins with 1357 would-be proxies; they show no consistent signal, and if averaged together they produce a wiggly line with no clear overall trend. So Mann subjects them to ‘screening’ (cherry-picking), and retains only 484 for use in the final reconstruction. No effort is made to check whether the proxies correlate with local temperature. Instead, those that show a strong upward trend in recent times – as a small minority do – are automatically selected and given great weight. Mann even extends proxies into more recent times by inventing (‘extrapolating’) data, using a method that tends to create an uptick similar to that of the instrumental record. The inevitable result is a hockey-stick type of graph – and one in which the ‘blade’ is based on less than 5% of the data (http://noconsensus.wordpress.com 1, 2). But few scientists seem to care that Mann’s results are again based on flawed statistical procedures, as his message of ‘unprecedented’ recent warming is what a great many people want to hear.

Fig. 4.4 The hockey stick reborn. Mann calls his latest statistical technique EIV (‘error in variables’); the green curve is for land and the black one for land+oceans. CPS (‘composite and scale’) is another of his techniques. Note how temperature records seem to have ended in 2000.
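
The effect that screening can have on trendless data can be illustrated with a toy simulation. The sketch below is not Mann’s algorithm and uses no real proxy data: it generates synthetic random walks (a crude stand-in for autocorrelated proxy noise), keeps only those that happen to correlate with a hypothetical rising ‘instrumental’ record over the final 100 years, and averages the survivors. All function names, the 0.5 correlation threshold and the series counts are assumptions chosen purely for illustration.

```python
import random
import statistics


def random_walk(n_years, rng):
    """Return a trendless random walk: pure noise, no temperature signal."""
    level, series = 0.0, []
    for _ in range(n_years):
        level += rng.gauss(0.0, 1.0)
        series.append(level)
    return series


def correlation(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def composite(series_list):
    """Average all series year by year."""
    return [statistics.fmean(values) for values in zip(*series_list)]


def rise(series, calib_years=100):
    """Change in a series over the calibration window (the 'blade')."""
    return series[-1] - series[-calib_years]


def screening_demo(n_series=1000, n_years=500, calib_years=100,
                   threshold=0.5, seed=0):
    """Generate synthetic series and keep those matching a rising target."""
    rng = random.Random(seed)
    target = list(range(calib_years))  # hypothetical rising instrumental record
    all_series = [random_walk(n_years, rng) for _ in range(n_series)]
    kept = [s for s in all_series
            if correlation(s[-calib_years:], target) > threshold]
    return all_series, kept


if __name__ == "__main__":
    all_series, kept = screening_demo()
    print("series kept after screening:", len(kept), "of", len(all_series))
    print("rise of unscreened average:", round(rise(composite(all_series)), 2))
    print("rise of screened average:  ", round(rise(composite(kept)), 2))
```

Under these assumptions the unscreened average barely changes over the calibration window, whereas the screened average rises, even though none of the series contains a temperature signal. This shows only that selection on a recent trend can produce a ‘blade’ by itself; it says nothing about how large the effect is in any particular published reconstruction.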

Divergence and non-disclosure

It has been known for over a decade that many tree-ring series which correlate with the temperature rise from 1900 to 1960 ‘diverge’ thereafter, indicating falling temperatures rather than rising temperatures. This is known as the ‘divergence problem’ and is a major unresolved problem in temperature reconstructions based on tree rings. If tree-ring series fail to show modern warming, how can we be sure that their failure to show significant warm periods in the distant past reflects reality? The only conclusion possible at present is that tree rings should not be used for temperature reconstructions.

The lead author of the palaeoclimate chapter in the TAR in which Mann’s hockey stick was featured was none other than Michael Mann himself. One of the lead authors of the same chapter in AR4 is Keith Briffa, who has likewise promoted his own work. He allowed the post-1960 data from one of his graphs included in AR4 (and previously in the TAR) to be chopped off, because the curve went down instead of up. As reviewer of the chapter concerned, Steve McIntyre asked the IPCC to restore the deleted portion, but it refused, saying that this would be ‘inappropriate’. David Holland (2007) quotes further examples of how, in the face of critical reviews, the IPCC refused to present an honest account of the divergence problem in AR4, and then adds:

These breathtaking examples show that the IPCC panel is unable to grasp the obvious nonsense of a claim that historic reconstructions are evidence that one period is warmer than another when the reconstructions cited cannot replicate the instrumental record of the actual decades which they are alleging to be warmer. The evidence would be speculative if the divergence problem was unknown, but knowing it makes the evidential value of the reconstructions nil. (p. 970)

In producing his latest version of the hockey stick, Mann, too, deletes Briffa’s post-1960 tree-ring data, and then proceeds to substitute ‘infilled’ (imaginary) data that don’t suffer from the divergence problem (climateaudit.org/?p=3747)!

Another major problem with tree-ring reconstructions is the inconsistency between older and more recent versions of tree-ring series from key sites, notably Tornetrask (Sweden), Polar Urals, and Sheep Mountain (California). If tree-ring width is assumed to represent temperature, the medieval period (11th century) in the updated versions turns out to be at least as warm as the 20th century, whereas in the older versions the 20th century is far warmer. Because the older tree-ring data from these three sites play a central role in IPCC-favoured temperature reconstructions, using the new data would have a drastic impact on 9 out of 10 reconstructions in AR4 (McIntyre, 2008b). Yet the IPCC doesn’t even mention the updated versions, let alone discuss the implications of the discrepancies for its bold assertion that recent warming is ‘likely’ unprecedented in the past 1300 years. In short, the palaeoclimate chapter of AR4 is a perfect illustration of the IPCC’s bias, lack of integrity and lack of credibility.

The task of checking the temperature reconstructions used by the IPCC is made very difficult by the frequent refusal of the scientists concerned to disclose their data and methodology; journals and funding agencies seem unwilling to enforce their own rules requiring the archiving of data, and the IPCC, too, brings no pressure to bear, despite its own rules on transparency and openness. Requests under the Freedom of Information Act have led to the release of some material, but very grudgingly and often in incomplete form. Phil Jones, head of the CRU, justified his refusal to release data behind a key article cited by the IPCC as follows: ‘We have 25 or so years invested in the work. Why should I make the data available to you, when your aim is to try and find something wrong with it?’ This ridiculous, unscientific mentality is widespread among leading climate scientists.

David Holland (2007) presents 10 of the 70 reviewers’ comments and IPCC responses on the paragraph in AR4 that defends the hockey stick against the criticisms of McIntyre and McKitrick. He says that the material demonstrates the ‘arrogance and bias’ of those responsible for the published text, which contains several demonstrably false statements. The internal IPCC discussions that led to the responses to criticisms and to the final text have not been made public. Holland writes:

There is no evidence, as yet disclosed, to say how the omission of inconvenient data from the spaghetti diagram was agreed or the ‘hockey stick’ paragraph was decided against the many protesting comments and the well documented and peer reviewed studies initiated by the US House of Representatives. ...

[D]espite its stated clear responsibility to make available to critical reviewers all the data and methodology of the science it reviews, the IPCC does not, and while the various national academies of science also exhort scientists to follow best practice, there is no enforcement. (pp. 975-6)

Apparently stung by the adverse publicity generated by the forensic work of Steve McIntyre and others, members of the ‘hockey team’ have recently launched a ‘Paleoclimate Reconstruction Challenge’, to address problems with different methods of reconstructing past climates. At a closed meeting in May 2008, Keith Briffa and Ed Cook presented a paper on sources of uncertainty in tree-ring data. The paper is available online, but it might have been put there by mistake. For in it, Briffa, an AR4 lead author, honestly admits to key problems that were denied in AR4 and in his own responses to review criticisms by McIntyre and McKitrick (climateaudit.org/?p=3260). As McIntyre comments: ‘What a fiasco.’

LIA and MWP

The overall trend in global temperature has been upwards for about 400 years, since the lowest point of the Little Ice Age (LIA). In many parts of the world the LIA (c. 1300-1900) was the coldest period of the current interglacial (the Holocene), and was not caused by any drop in atmospheric CO2 concentration. It was characterized by bitter winters, advancing glaciers, short growing seasons, and famines.

Fig. 4.5 ‘Hunters in the snow’, an imaginary landscape painted by Pieter Bruegel the Elder in February 1565, during a harsh winter. (cfs.tistory.com)

Fig. 4.6 The River Thames in central London in 1677, showing stranded ice floes. Painted by Abraham Hondius (wikipedia.org). During the LIA the River Thames regularly froze in winter, and fairs complete with swings, sideshows and food stalls were held on the ice.

The LIA was preceded by the Medieval Warm Period (MWP) or Medieval Climate Optimum (c. 800-1300 AD), a time of flourishing agriculture, expanding population and increased prosperity, according to historical records. The hockey stick sought to abolish the MWP as it undermines the claim that modern temperatures are in any way unusual, unnatural or ‘unprecedented’. It was during the MWP that Iceland and Greenland were settled by the Vikings. No significant ice was reported in Iceland until after 1200, and grain continued to be grown there until the late 1500s. In Greenland, the settlers were able to grow cereals and rear sheep and cattle – something not possible today. By 1300 more than 3000 colonists lived on 300 farms scattered along the west coast. Thereafter, the climate began to deteriorate. Stock rearing became unreliable, crops failed and the settlements were cut off from the outside world by sea ice for years at a time. By the end of the 15th century the settlers in Greenland had been wiped out and those in Iceland were struggling to survive in the face of crop failures and a collapsing fishing industry (env.leeds.ac.uk, sunysuffolk.edu). Despite the 20th-century warming, the Viking graveyard at Hvalsey, Greenland, is still under permafrost.

AGW proponents often insist that the MWP was restricted to parts of the northern hemisphere (mainly Europe and the North Atlantic), despite abundant evidence to the contrary. Local temperature and temperature-correlated records from about 100 locations around the world indicate that the vast majority of locations experienced both the Medieval Warm Period and the Little Ice Age, and most did not experience unprecedented warming during the 20th century (Robinson et al., 2007).

Studies in California have found evidence that trees grew at higher altitudes in the MWP, and that the temperature there must have been 3.2°C warmer than today. Similar evidence shows that annual and summer temperatures in the Polar Urals were 1.5 and 2.3°C warmer respectively during the MWP. Steve McIntyre asked the IPCC to include this information in AR4, but they refused. Researchers have concluded from the changing content of sediments in the Venezuelan Andes that the glaciers did not exist in the MWP and that temperatures there declined by 2.6-4.3°C between the MWP and the Little Ice Age (McIntyre, 2008a,b).

The MWP was preceded by the cool Dark Ages, when desperate Germanic tribes sacked Rome, and this in turn was preceded by the Roman Warm Period (c. 250 BC - 100 AD), when grape-growing advanced northward in both Italy and Britain. Even earlier, there had been the Minoan Warm Period (c. 1400 BC - 1200 BC). Evidence for these alternating periods of warming and cooling comes from abundant historical data, and from numerous analyses of palaeo-temperatures (Singer & Avery, 2007) – which the IPCC chooses to exclude.

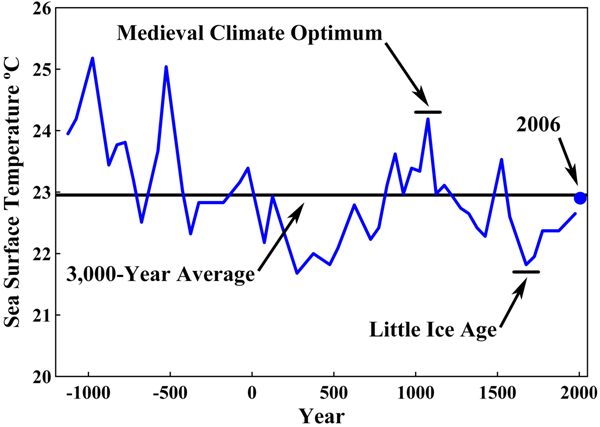

Fig. 4.7 Surface temperatures in the Sargasso Sea, as determined by isotope ratios of marine organism remains in sediment at the bottom of the sea (Robinson et al., 2007).

Craig Loehle recently published a temperature reconstruction for the past 2000 years which, unlike all the reconstructions used by the IPCC, avoids tree-ring data altogether (Loehle, 2007; Loehle & McCulloch, 2008). He took this step because of the clear evidence that tree-ring width does not respond linearly to temperature. The main problems are that tree-ring width may rise with temperature to some optimal level, and then decrease with further temperature increases, and it also responds to precipitation changes, variations in atmospheric pollution, diseases, pest outbreaks, and fertilization by rising CO2 levels. His study used 18 non-tree-ring proxy series published in the literature and calibrated to local temperature, including borehole temperature measurements, pollen remains, oxygen isotope data, and diatoms deposited on lake bottoms. The resulting reconstruction clearly shows the MWP and LIA.

Fig. 4.8 Non-tree-ring temperature reconstruction with 95% confidence intervals. The Medieval Warm Period was significantly warmer than the 2000-year average during most of the period 820-1040 AD. The Little Ice Age was significantly cooler than the average during most of 1440-1740 AD. The last point on the graph represents the 29-year average temperature centred on 1935. Even after adding the (probably inflated) GISS temperature increase since then of 0.34°C, the MWP remains marginally above the temperature at the end of the 20th century. (Loehle & McCulloch, 2008)
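
The point about nonlinear tree-ring response can be made concrete with a small sketch. It assumes a purely hypothetical inverted-U growth curve (the optimum of 15°C, the peak width and the curvature are invented for illustration and are not taken from Loehle or from any real tree species), calibrates a straight line on temperatures below the optimum, and then applies that line to a ring grown in warmer conditions.

```python
def ring_width(temp_c, optimum_c=15.0, peak_width_mm=2.0, curvature=0.02):
    """Hypothetical inverted-U response: the widest ring forms at the optimum."""
    return max(0.0, peak_width_mm - curvature * (temp_c - optimum_c) ** 2)


def fit_line(xs, ys):
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return my - slope * mx, slope


def calibrate():
    """Fit temperature as a linear function of ring width on a cool interval."""
    temps = [10.0 + 0.5 * i for i in range(9)]  # 10.0 to 14.0 °C, below optimum
    widths = [ring_width(t) for t in temps]
    return fit_line(widths, temps)


if __name__ == "__main__":
    a, b = calibrate()
    true_temp = 18.0  # warmer than the assumed optimum
    width = ring_width(true_temp)
    print("ring width at 12 °C:", ring_width(12.0))
    print("ring width at 18 °C:", width)
    print("temperature reconstructed from the 18 °C ring:", round(a + b * width, 2))
```

With this assumed curve, a ring grown at 18°C is exactly as wide as one grown at 12°C, so a linear calibration built on the cool side of the optimum reads the warm year as a cool one. If real trees behave in anything like this way, a past warm period could be systematically understated.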

Note that the reason the ‘hockey team’ favours tree rings is not that these data always fail to show a significant MWP; some tree-ring series do show a warm MWP, but ‘the team’ ignores them (McIntyre, 2008a,b). Some authors simply withhold the adverse data – e.g. G. Jacoby, who only archived 10 tree-line series that suited him and discarded the 26 series that didn’t on the grounds that they ‘obviously’ weren’t sensitive to temperature (climateaudit.org/?p=2920). Such is the sorry state of this field of climate ‘science’.

Receding glaciers often yield evidence showing that modern glacier recession is not unprecedented even within the Holocene. For instance, since the ice melted in the Schnidejoch Pass in the Swiss Alps in the summer of 2003, hundreds of archaeological relics have been recovered, dating from the Neolithic Era, the Bronze Age, the Roman period and medieval times, a timespan of 5000 years. They tell of repeated alternations between warm periods when the pass was open and provided a quick route across the Alps, and cold periods when it was shut by the ice. The youngest item is part of a shoe dating from the 14th or 15th century, at the end of the Medieval Warm Period. The Schnidejoch was then blocked by glaciers during the Little Ice Age, until it became ice-free again early in the 21st century (news.bbc.co.uk).

Fig. 4.9 Top: The Susten Pass in the Alps in 1993. The two dotted lines show how far the glacier extended in 1856, while the dashed line shows how far it extended in 1922. Bottom: The same pass as it probably looked about 2000 years ago in Roman times, based on archaeological evidence. (climateaudit.org/?p=772)

Since 1984, hundreds of researchers have amassed a wealth of evidence from around the world showing that for at least the last several hundred thousand years, an approx. 1500-year (±500 years) warm-cold cycle has been superimposed over the longer, stronger ice ages and interglacial phases (Singer & Avery, 2007). Evidence for these ‘Bond events’ (as the Holocene events are called) or ‘Dansgaard-Oeschger events’ comes from ice and sediment cores, ancient tree rings, stalagmites, etc. The temperature swings are relatively mild, but can reach over 3°C above and below the mean in high latitudes.

Temperatures during most of the last 1.6 million years (the Pleistocene) were colder than today, and continental glaciers up to 5 km thick repeatedly covered much of North America, Europe and Asia. Long glacial periods alternated with short (10,000-30,000-year) interglacials. The alternation from one to the other is usually attributed mainly to changes in the earth’s orbital parameters: the precessional ‘wobble’ of its axis, variations in axial tilt, and variations in orbital eccentricity.

Fig. 5.1 The historical cycle of warm and cold periods during the last 400,000 years, based on ice core samples from Vostok, Antarctica. (energytribune.com)

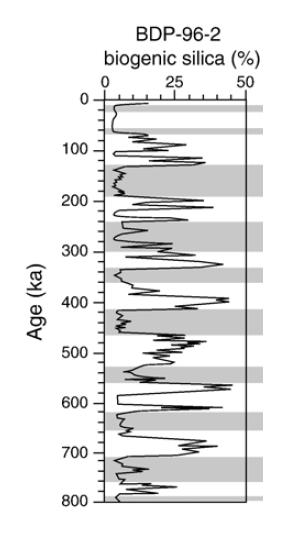

Fig. 5.2 The climate of the past 800,000 years reconstructed from diatoms in sediments from Lake Baikal, Russia (Mackay, 2007). It shows that many periods in the past were much warmer than today. Though not visible in this graph, the data show a warm period between 850 and 1200 AD, and that modern warming in this region began about 250 years ago.

The current interglacial (the Holocene) began about 11,600 years ago and is already longer than several previous interglacials. The figure below shows the warming at the end of the last ice age, and that for 7000 of the last 10,000 years the mean planetary temperature was more than 0.5°C warmer than today.

Fig. 5.3 Reconstruction based on temperature measurements in more than 6000 boreholes around the world. (ncpa.org/ba/ba337/ba337.html)

The graph below shows how the present can be considered to be part of either a warming or a cooling trend depending on the period considered.

Fig. 5.4 (atomic-fungus.livejournal.com/2007/12/07)
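
How the sign of a trend depends on the period chosen can be shown with a few lines of code. The series below is entirely synthetic: a slow long-term cooling with a 1500-year cycle superimposed, with amplitudes and phase invented for illustration rather than fitted to any data. The sketch fits an ordinary least-squares line to the most recent 300, 1000 and 10,000 years and reports each slope.

```python
import math


def synthetic_temperature(year):
    """Hypothetical anomaly in °C; year <= 0 counts years before the present."""
    long_term = -0.00005 * (year + 10_000)              # slow cooling
    cycle = 0.3 * math.sin(2 * math.pi * year / 1500)   # assumed 1500-year cycle
    return long_term + cycle


def trend_per_century(years, values):
    """Least-squares slope of values against years, in °C per 100 years."""
    n = len(years)
    mean_y, mean_v = sum(years) / n, sum(values) / n
    slope = (sum((y - mean_y) * (v - mean_v) for y, v in zip(years, values))
             / sum((y - mean_y) ** 2 for y in years))
    return 100.0 * slope


def recent_trend(window_years):
    """Trend over the last `window_years` years of the synthetic series."""
    years = list(range(-window_years, 1))
    values = [synthetic_temperature(y) for y in years]
    return trend_per_century(years, values)


if __name__ == "__main__":
    for window in (300, 1000, 10_000):
        print(f"last {window:>6} years: {recent_trend(window):+.4f} °C per century")
```

For this made-up series the last 300 years show warming while the last 10,000 years show cooling, so the same present moment sits on a rising or a falling trend line depending solely on where the window starts.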

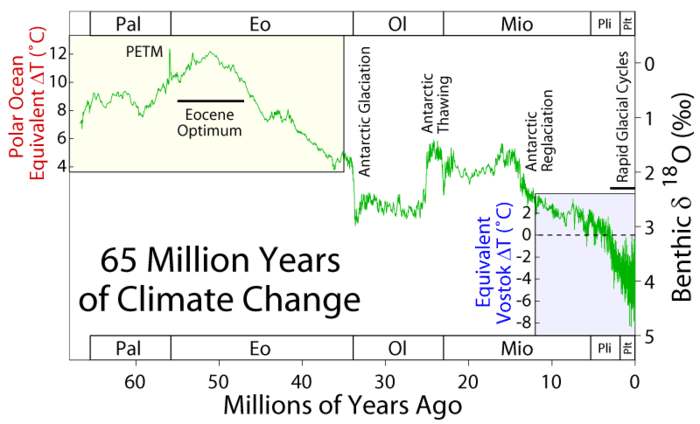

A survey of the earth’s climate over even vaster spans of geologic time helps to put modern climate change even more firmly into perspective. Although such climate reconstructions are subject to many uncertainties, the general picture is clear. For most of the earth’s history, the globe has been warmer than it has been for the last 200 years; it has rarely been cooler.

Fig. 5.5 Reconstruction of climate change over the last 5 million years by Lisiecki and Raymo (2005), based on oxygen isotope measurements from deep-sea sediment cores. The isotope variations reflect local temperature and global changes associated with the extent of continental glaciation. The variations are very similar in shape to the temperature variations recorded at Vostok, Antarctica, covering the last 420,000 years. The horizontal baseline represents the temperature around 1950. (globalwarmingart.com)

Fig. 5.6 Climate change over the last 65 million years (comprising the Paleocene, Eocene, Oligocene, Miocene, Pliocene and Pleistocene). The graph is based on oxygen isotope measurements. PETM = Paleocene-Eocene Thermal Maximum. (globalwarmingart.com)

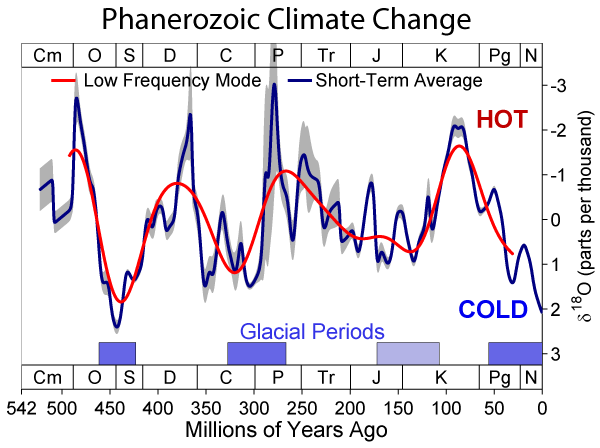

Fig. 5.7 Climate change during the Phanerozoic eon (the last 540 million years), based on variations in oxygen isotope ratios. The Phanerozoic comprises the Cambrian, Ordovician, Silurian, Devonian, Carboniferous, Permian, Triassic, Jurassic, Cretaceous, Paleogene (Pal, Eo, Ol) and Neogene (Mio, Pli, Plt). (globalwarmingart.com)

The fact that the earth’s climate has been relatively stable during the Phanerozoic, with no ‘run-away’ greenhouse or icehouse effects, indicates that the climate is self-regulatory, thanks to strong negative (attenuating) feedbacks mainly involving the hydrological cycle (clouds, water vapour and precipitation). The notion that we live on a ‘delicate blue planet’ prone to catastrophic ‘tipping points’ is a fable.
